Limitations to scaling Ethereum

Ethereum’s launch was a ground-breaking change in the blockchain ecosystem, greatly expanding the range of computations that can be performed deterministically and with integrity. As much as it was an evolution, however, it was also a product of the inspiration provided by Bitcoin. The simple concept that blocks could hold data in a permanent and immutable form, trusted by any user, was as compelling an objective as a new technology could hope for. Many great minds saw only the limitations, though, and have been striving ever since to upgrade Ethereum to reach new heights.

These limitations have most often taken the form of throttled transaction throughput. The dominant factor is the practical limit on how much data a single block can contain before processing it exceeds the computational capability of the average user. Rollups, one of the main forms of layer-2 (L2) scaling, were the first key step: they allow users to execute transactions off-chain and then post a proof, along with any data needed to verify it, to be stored with a block. These technologies are now well established and stable, but they are only an intermediate step towards the end goal of more than 100,000 transactions per second.

The premise behind EIP-4844 is that the data provided with each block, used to verify that transactions were executed correctly off-chain, does not need to be stored permanently. If that data were instead pruned once a sufficient number of verifiers could be assumed to have checked the original proof, the data-storage requirements on users would decrease significantly. This in turn allows larger packets of data (the result of more transactions being covered by a single proof) to be stored with blocks, increasing the transactional throughput of Ethereum.
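To make the storage argument concrete, here is a rough back-of-the-envelope sketch. The constants are not taken from this article; they are assumptions based on the EIP-4844 and consensus-layer parameters as they stood at the Dencun launch (blob size, a target of 3 and a maximum of 6 blobs per block, and a retention window of 4096 epochs). The function names are purely illustrative.

```python
# Assumed parameters at the Dencun launch (not stated in this article).
BYTES_PER_BLOB = 4096 * 32        # 4096 field elements of 32 bytes each
TARGET_BLOBS_PER_BLOCK = 3
MAX_BLOBS_PER_BLOCK = 6
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
RETENTION_EPOCHS = 4096           # minimum period nodes keep blob data

GIB = 2**30


def retention_days() -> float:
    """Length of the retention window in days."""
    return RETENTION_EPOCHS * SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 86_400


def pruned_storage_bytes(blobs_per_block: int) -> int:
    """Upper bound on blob data held at any time when old blobs are pruned."""
    slots_retained = RETENTION_EPOCHS * SLOTS_PER_EPOCH
    return slots_retained * blobs_per_block * BYTES_PER_BLOB


def unpruned_growth_bytes_per_year(blobs_per_block: int) -> int:
    """Yearly growth if the same data were instead kept forever."""
    slots_per_year = 365 * 24 * 3600 // SECONDS_PER_SLOT
    return slots_per_year * blobs_per_block * BYTES_PER_BLOB


if __name__ == "__main__":
    print(f"retention window: {retention_days():.1f} days")
    for label, n in (("target", TARGET_BLOBS_PER_BLOCK), ("max", MAX_BLOBS_PER_BLOCK)):
        held = pruned_storage_bytes(n) / GIB
        grown = unpruned_growth_bytes_per_year(n) / GIB
        print(f"{label}: {held:.0f} GiB held with pruning, "
              f"{grown:.0f} GiB added per year without it")
```

Under these assumptions the pruned data set is bounded (it stops growing once the retention window is full), whereas permanently stored data would keep accumulating every year. That bounded footprint is what leaves room to raise the amount of data per block later.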

What will 'proto-danksharding' change?

EIP-4844 intends to provide Ethereum with ‘proto-danksharding’: an intermediate step towards the more efficient rollup proof system described above. While it will put in place most of the data types, functions and settings required to implement ‘danksharding’, the end goal, it will not itself be the final product capable of reaching more than 100,000 transactions per second. Data will be pruned from each block after an extended period of time has passed, but the size of this data will still be limited to such an extent that transactional throughput may not immediately increase to a significant degree. Instead, the greatest immediate impact for users of Ethereum will be reduced gas fees: data storage in this new temporary form will be cheaper than the existing avenues for storing data on-chain, so the cost of executing transactions on rollups will decrease.
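The fee effect can be illustrated with a small sketch comparing the cost of posting the same amount of rollup data as calldata versus as a blob. The gas constants are assumptions based on the protocol rules at the time of Dencun (16 gas per non-zero calldata byte, 2^17 blob gas per blob), and the two fee levels are hypothetical values chosen only for illustration. Transaction overhead and priority fees are deliberately ignored.

```python
# Assumed protocol constants at the time of Dencun (not stated in this article).
GAS_PER_BLOB = 2**17                  # blob gas charged per blob
BYTES_PER_BLOB = 4096 * 32            # 131,072 bytes of data per blob
CALLDATA_GAS_PER_NONZERO_BYTE = 16    # execution gas per non-zero calldata byte
WEI_PER_GWEI = 10**9
WEI_PER_ETH = 10**18


def calldata_cost_wei(num_bytes: int, base_fee_wei: int) -> int:
    """Worst-case cost of posting data as calldata (every byte non-zero)."""
    return num_bytes * CALLDATA_GAS_PER_NONZERO_BYTE * base_fee_wei


def blob_cost_wei(num_blobs: int, blob_base_fee_wei: int) -> int:
    """Cost of posting data as blobs, priced in the separate blob fee market."""
    return num_blobs * GAS_PER_BLOB * blob_base_fee_wei


# Hypothetical fee levels, for illustration only.
execution_base_fee = 30 * WEI_PER_GWEI
blob_base_fee = 1 * WEI_PER_GWEI

as_calldata = calldata_cost_wei(BYTES_PER_BLOB, execution_base_fee)
as_blob = blob_cost_wei(1, blob_base_fee)

if __name__ == "__main__":
    print(f"as calldata: {as_calldata / WEI_PER_ETH:.6f} ETH")
    print(f"as one blob: {as_blob / WEI_PER_ETH:.6f} ETH")
    print(f"ratio: {as_calldata // as_blob}x")
```

Two things drive the gap in this sketch: a blob byte consumes one unit of blob gas rather than up to 16 units of execution gas, and blobs are priced in their own fee market, which can sit far below the execution base fee when blob demand is low. Real savings depend on actual fee levels and on how well a rollup fills each blob.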

Beyond the broad strokes of what is technologically possible given these changes, there is much to be said about the practical, observable consequences. Assuming gas fees on L2s do decrease dramatically as expected, there will be services and business models that can be deployed more effectively. Social networks and games are two services whose more advanced forms have thus far been financially non-viable; a Facebook clone, for instance, would require a constant stream of transactions from every active user. However, if transaction costs post-EIP-4844 approach those of operating a database or server, such services would become viable. Likewise, many existing businesses such as staking pools may be willing to absorb minuscule transaction fees themselves, allowing users to conduct fee-free operations.

What is the status of EIP-4844?

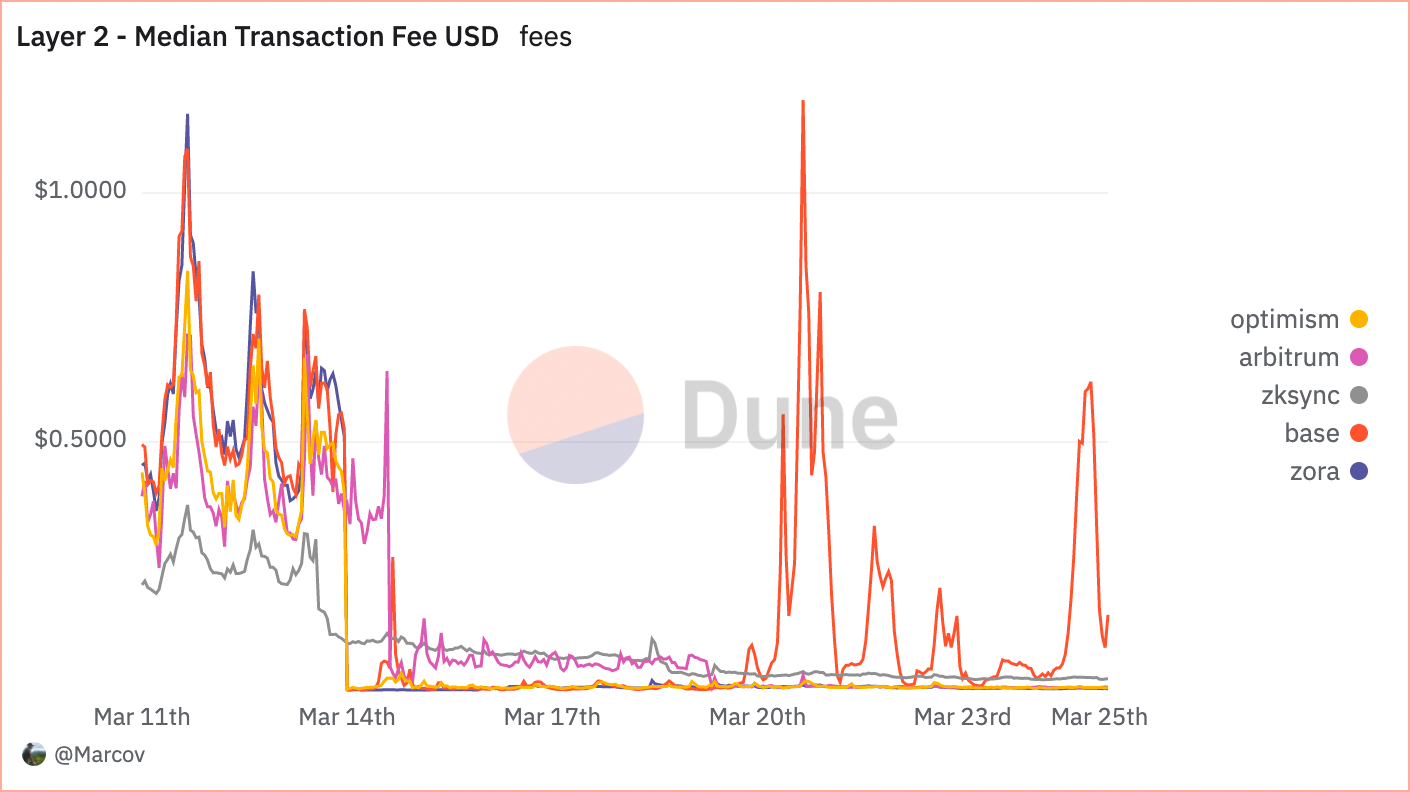

EIP-4844 was implemented as part of the Dencun hard fork of Ethereum on March 13, 2024. There appear to be early signs of a dip in average gas fees, though the sporadic nature of gas fees means few summaries can be considered conclusive at this early stage.

How is EIP-4844 changing Ethereum?

Given the short time that has passed since the launch of Dencun, few conclusions can be drawn yet. Referring to the chart below, it is clear that gas fees on L2 services have decreased, as was hypothesised for EIP-4844. What is less clear is whether there is a floor to this effect, or whether demand will increase in response to the lower gas fees. Regardless of further observations, it appears that the chief stated objective has been achieved: gas fees have decreased for the current levels of demand, and the foundations have been laid for ‘danksharding’.

The above data is provided by this invaluable resource.

Following the hard fork, implemented late on March 13th, there was an almost immediate and dramatic decrease in gas fees across all L2 services, with the exception of Arbitrum (which saw the same effect ~16 hours later) and zkSync (which is expected to adopt the changes in May). While the majority of the intended effects will be seen on L2 services, EIP-4844 may also drive down gas fees on the L1. This effect had not been observed at the time of publishing, but it could occur as demand moves away from the L1 towards cheaper transactions on L2s.

What will 'danksharding' change?

‘Danksharding’, the endgame that EIP-4844 is building towards, is in all likelihood years away from implementation. ‘Proto-danksharding’ is only one step in the process. Eventually, it is intended that the temporary data stored with blocks on-chain, used to verify proofs of the execution of transactions on rollups, will be much larger in scale. Significantly more transactions could therefore be executed off-chain at once, increasing transactional throughput. For this to be possible, the computational requirements placed on average users must be carefully managed to avoid the network becoming too centralised.

Currently, it is believed this management will come in the form of two core changes: proposer-builder separation and data-availability sampling. Proposer-builder separation is an important topic that cannot be covered in detail within this article; an upcoming article will be dedicated to it. Essentially, it is expected that two new roles will be brought into existence: block-builders and block-proposers. Block-building will be a highly specialised role intended for those with significant computational resources, because the large sections of temporary data being stored with blocks will require substantial resources to generate proofs for. In addition, these large sections of temporary data will need to be verifiable without reading all of the data, to keep the network efficient. This is where data-availability sampling comes in, allowing verification to be performed from smaller subsets of the overall data.
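The intuition behind data-availability sampling can be sketched with a simplified probability model. The assumptions here are ours and not a description of the final protocol: the data is erasure-coded so that it can be fully reconstructed whenever at least half of the extended chunks are available, and each verifier checks a number of chunks chosen independently and uniformly at random. Under that model, if the data cannot be reconstructed then fewer than half of the chunks are available, so each sample succeeds with probability below one half. The function names are hypothetical.

```python
import math


def false_accept_probability(samples: int, available_fraction: float = 0.5) -> float:
    """Upper bound on the chance that every random sample succeeds even though
    only `available_fraction` of the chunks are actually available."""
    return available_fraction ** samples


def samples_needed(target_probability: float, available_fraction: float = 0.5) -> int:
    """Smallest number of samples that pushes the bound below the target."""
    return math.ceil(math.log(target_probability) / math.log(available_fraction))


if __name__ == "__main__":
    for k in (10, 20, 30):
        print(f"{k} samples -> bound {false_accept_probability(k):.2e}")
    print("samples for a one-in-a-billion bound:", samples_needed(1e-9))
```

The point of the sketch is that confidence grows exponentially with the number of samples while the work per verifier stays small and independent of the total data size. The actual danksharding design is expected to use a more elaborate encoding and sampling scheme, so these numbers are indicative only.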